Imagine an AI that doesn’t just act brainy, but actually works in a way that mirrors the human brain.

That’s the bold claim coming from a team of Chinese researchers, who say they have built the world’s first truly “brain-like” large language model.

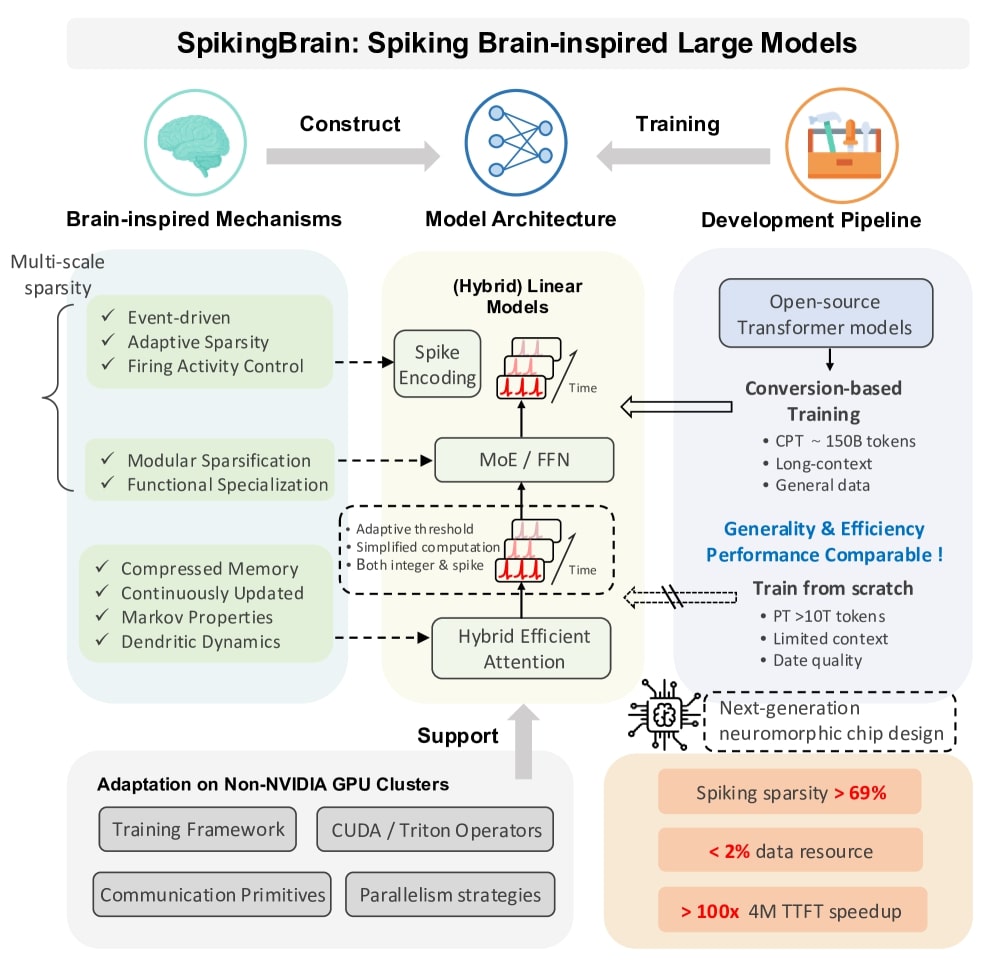

The project, called SpikingBrain1.0, has been presented as a breakthrough in artificial intelligence that could make powerful models faster, leaner, and less dependent on massive amounts of data.

According to The Independent, the scientists behind it argue their system is 25 to 100 times faster than conventional models under certain conditions while requiring only a fraction of the usual training data.

Most popular AI systems today, like ChatGPT, work by brute force. They are trained on massive amounts of text using supercomputers with powerful GPUs. It works well, but it also costs huge amounts of energy and money.

SpikingBrain1.0 tries to work more like a human brain. Instead of analyzing every single piece of input at once, it "fires" only the parts it needs. Think of how you read a book: you don't process every detail at once; you focus only on the words and sentences you need in the moment.
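To make the "fire only what it needs" idea concrete, here is a toy sketch in Python. It assumes a simple scheme in which each activation is turned into an integer number of spikes by dividing by a fixed threshold, and units with zero spikes are skipped. The function names and the threshold value are hypothetical; this illustrates event-driven sparsity in general, not SpikingBrain1.0's actual neuron model.

```python
def spike_encode(activations, threshold):
    """Toy spike encoding: turn each activation into a signed integer spike count.

    Any activation whose magnitude is below `threshold` truncates to 0 spikes,
    so that unit stays silent for this step.
    """
    return [int(a / threshold) for a in activations]


def event_driven_dot(weights, spikes, threshold):
    """Weighted sum that only does work for units that actually fired.

    Returns the (approximate) weighted sum and how many units were processed.
    """
    total = 0.0
    work = 0
    for w, s in zip(weights, spikes):
        if s == 0:
            continue  # silent unit: skipped entirely, no multiply-add
        total += w * s * threshold  # each spike stands for `threshold` of activation
        work += 1
    return total, work


activations = [0.05, 1.2, -0.02, 0.0, 0.9, -0.7, 0.01, 0.3]
weights = [1.0] * len(activations)
spikes = spike_encode(activations, threshold=0.25)
total, work = event_driven_dot(weights, spikes, threshold=0.25)
print(spikes)  # [0, 4, 0, 0, 3, -2, 0, 1]
print(f"processed {work} of {len(spikes)} units, weighted sum ~ {total}")
```

Half of the eight units stay silent, so only four multiply-adds are performed. The result (1.5) only approximates the exact dense sum of 1.74, which is the usual trade-off: coarser, spike-based signals in exchange for skipped work.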

Here’s a simple example:

- If you ask a regular AI model, "What sound does a cat make?" it processes the entire question at once, comparing every word with every other word.
- SpikingBrain1.0, on the other hand, pays most attention to the nearby and most relevant words, in this case "cat" and "sound." It doesn't waste effort on less important words like "what" or "does."
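The difference between these two approaches can be sketched with sliding-window attention, one common form of local attention; this sketch does not claim that SpikingBrain1.0 uses exactly this scheme or these window sizes. The window decides which words are in scope for each position, and ordinary attention weights then decide which of those nearby words matter most. The function names and the window sizes (5 and 512) are illustrative assumptions.

```python
def full_attention_cost(n):
    """Causal full attention: token i is scored against tokens 0..i, n(n+1)/2 pairs total."""
    return n * (n + 1) // 2


def sliding_window_cost(n, window):
    """Sliding-window attention: token i is scored against at most `window` tokens
    (itself plus the window - 1 tokens just before it)."""
    return sum(min(i + 1, window) for i in range(n))


def visible_tokens(tokens, i, window):
    """Tokens that position i is allowed to attend to under a sliding window."""
    return tokens[max(0, i - window + 1): i + 1]


tokens = ["What", "sound", "does", "a", "cat", "make", "?"]
print(full_attention_cost(len(tokens)))            # 28
print(sliding_window_cost(len(tokens), window=5))  # 25
print(visible_tokens(tokens, tokens.index("make"), window=5))
# ['sound', 'does', 'a', 'cat', 'make']

# The gap widens with length: full attention grows quadratically,
# the windowed version only linearly once n exceeds the window.
for n in (1_000, 10_000, 100_000):
    print(n, full_attention_cost(n), sliding_window_cost(n, window=512))
```

For a seven-word question the saving is tiny (25 comparisons instead of 28). The payoff appears with long inputs, where full attention grows with the square of the length while the windowed version grows roughly linearly; that is where efficiency claims like these usually come from.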

By doing this, the system can train faster and with less data. According to the team's research paper, they used less than 2% of the training data that typical models need, yet still achieved results they call "comparable."


Built on Home-Grown Hardware

Another striking detail is the hardware. Instead of running on Nvidia's high-end GPUs, which have become a global choke point for AI development, SpikingBrain1.0 operates on a Chinese-designed chip platform called MetaX.

This is significant not just from a technological perspective but also from a geopolitical one.

As the U.S. and China compete for dominance in artificial intelligence, developing models that can thrive on domestic hardware could give Beijing an edge while reducing dependence on Western technology.

Why It Matters

If the team’s claims hold up, SpikingBrain1.0 could be a major leap forward for efficiency in AI research and deployment.

- Speed: Up to 100× faster in some cases.
- Lower Costs: Reduced energy use and compute requirements.
- Smaller Datasets: Needs far less data to train.
- Hardware Independence: Runs on Chinese chips, sidestepping GPU shortages.

For companies, governments, and startups struggling with the high cost of training large AI systems, these improvements could make advanced AI more accessible.

The Catch: Bold Claims, Limited Proof

As exciting as the announcement sounds, there are still reasons to be cautious. First and foremost, the work has only appeared as a preprint on arXiv, meaning it has not gone through peer review. Without outside validation, it’s difficult to know whether the performance numbers are truly reliable.

Second, the claim that SpikingBrain1.0's performance is "comparable" to that of mainstream models is vague. Comparable on which benchmarks? On which tasks? And does the efficiency gain come at the cost of accuracy in areas like long-form reasoning or global context?

There's also the question of scalability. Local attention is efficient, but some AI tasks, such as understanding long documents or maintaining consistency across large contexts, require global attention.

Whether this new architecture can handle those challenges remains to be seen.
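One way to see this long-context concern is to count how far information can travel through stacked local-attention layers. The sketch below assumes plain sliding-window attention with a window that includes the current token; the function names, the 4,096-token window, and the 12-layer depth are hypothetical, not figures from the SpikingBrain1.0 paper.

```python
import math


def layers_needed(distance, window):
    """Stacked sliding-window layers needed before a token can draw on one
    `distance` positions earlier.

    Each layer lets information hop back at most window - 1 positions, so
    the answer is ceil(distance / (window - 1)).
    """
    if window < 2:
        raise ValueError("window must cover at least one earlier token")
    return math.ceil(distance / (window - 1))


def earliest_reachable(position, window, num_layers):
    """Earliest position whose information can reach `position` after num_layers layers."""
    return max(0, position - num_layers * (window - 1))


# A 4,096-token window sees nearby tokens directly, but linking the start of
# a ~100,000-token document to its end needs many stacked layers.
print(layers_needed(100, window=4096))      # 1
print(layers_needed(100_000, window=4096))  # 25
print(earliest_reachable(100_000, window=4096, num_layers=12))  # 50860
```

With only local layers, a 12-layer model in this setup never lets the last token see the first half of the document. This is one reason many efficient-attention designs interleave local layers with linear or full-attention layers that can connect distant tokens directly.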

Still, even if SpikingBrain1.0 turns out not to be a magic bullet, the project reflects a broader trend in AI: the search for smarter, more efficient architectures.

As The Independent notes, researchers worldwide are experimenting with “brain-inspired” designs that promise to break away from the sheer-scale approach of today’s models.

If validated, this technology could reshape the economics of AI, allowing powerful systems to be trained without billion-dollar supercomputers. And if it falters, it will still add valuable insights into how alternative neural architectures might evolve.

Conclusion

SpikingBrain1.0 may be more hype than revolution for now. But the fact that researchers are exploring brain-like designs, specialized chips, and efficiency over size points to a clear shift in the AI race.

Whether or not this model lives up to its promise, one thing is certain: the future of artificial intelligence won’t just be about making models bigger. It will be about making them smarter, leaner, and closer to the way our own brains work.