AI is changing our world really fast. It feels like only yesterday that it was a topic just for researchers, but now it’s everywhere in our daily lives.

From Google’s AI search results to Microsoft’s Copilot assistant, you’re already using it without even thinking about it. With all this happening so quickly, it’s more important than ever to understand AI.

The impact of AI is huge. Generative AI alone could boost the global economy by up to $4.4 trillion every year. Value on that scale means AI is reshaping whole industries and creating new kinds of jobs along the way.

To keep up, you’ve got to know the basics. Think of it like learning how the internet worked back in the 90s. This ChatGPT Glossary is here to help. It breaks down the 60 key terms you need to know to understand the technology that’s shaping our future.

The Basics: Core AI Concepts

To get the hang of more complex AI tools, you’ve got to start with the fundamentals. These core ideas are the building blocks of AI. Several of them fit together like nesting dolls: deep learning is a type of machine learning, which is itself a branch of AI.

Artificial Intelligence (AI)

This is the big umbrella. AI is a wide field of computer science that focuses on creating smart machines capable of doing tasks that usually need human intelligence.

Algorithm

An algorithm is basically a set of instructions or rules. It’s what a computer follows to solve a problem or complete a task. You can think of it like a recipe for any AI action.

Machine Learning (ML)

ML is a type of AI that helps computers learn from data without being programmed step by step. Instead of writing out rules, developers feed the system examples, and the computer learns to spot patterns on its own.
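To make “learning from examples” concrete, here is a minimal Python sketch. Rather than hard-coding a rule, the program fits a straight line to example pairs using ordinary least squares. The data and the `fit_line` name are made up for illustration; real ML systems learn far more complex patterns, but the idea of deriving the rule from data is the same.

```python
def fit_line(xs, ys):
    """Learn a slope and intercept from example (x, y) pairs via least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = how x and y vary together, divided by how much x varies on its own
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical examples: hours studied vs. test score
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]
slope, intercept = fit_line(hours, scores)
print(slope, intercept)  # 2.0 50.0
```

Nobody told the program that each extra hour adds two points; it recovered that pattern from the examples and can now predict a score for an hour count it never saw.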

Deep Learning

Deep learning is a more advanced subset of machine learning. It uses neural networks with many layers, which is where the “deep” comes from. These layers help the system learn from huge amounts of data, allowing it to do things like understand speech or identify objects in photos.

Neural Network

A neural network is a system loosely inspired by the human brain. It’s made up of connected nodes, or “neurons,” that process information. As it trains, it learns to recognize patterns and relationships in data.
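A minimal Python sketch of a single artificial “neuron” (a perceptron) shows the basic idea: it multiplies its inputs by weights, adds a bias, and fires if the total is above zero. Training nudges the weights after each mistake. This toy learns the logical AND rule; real networks connect many such units in layers and typically train them with gradient descent rather than this simple update rule. All names here are illustrative.

```python
def predict(weights, bias, inputs):
    # Weighted sum of the inputs plus a bias, then a simple on/off "activation"
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0

def train_perceptron(samples, epochs=10, learning_rate=1):
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # After a mistake, nudge each connection toward the right answer
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_gate)
print([predict(w, b, x) for x, _ in and_gate])  # [0, 0, 0, 1]
```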

Natural Language Processing (NLP)

NLP is the part of AI that helps computers understand human language. It’s what lets you chat with your phone or use a chatbot like ChatGPT. NLP is what makes these tools feel so natural when you talk to them.

The Engine Room: A ChatGPT Glossary for How AI Creates

Generative AI might seem like it’s pulling things out of thin air, but behind the scenes, a lot of technology is at work. This part of our glossary breaks down how these systems actually create.

Generative AI

This type of AI can create new, original content. It doesn’t just analyze data; it can make text, images, music, or even code. That ability to produce something new is what sets it apart from older AI technologies.

Large Language Model (LLM)

An LLM is the core of chatbots like ChatGPT. It’s an AI model trained on tons of text data, which helps it understand grammar, context, and the relationships between words to produce text that sounds human-like.

Transformer Model

The transformer is a groundbreaking neural network design introduced in 2017. Unlike older models that processed text one word at a time, transformers use a mechanism called attention to look at an entire passage at once and weigh how each word relates to the others. This leap in understanding is what’s behind the AI language skills we see today.
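The core operation of the 2017 transformer design, scaled dot-product attention, is usually written as the formula below. Here Q, K, and V are matrices of “query,” “key,” and “value” vectors computed from the tokens, and d_k is the length of the key vectors. Multiplying Q by the transpose of K scores every token against every other token at once, the softmax turns those scores into weights, and the weights decide how much of each token’s value flows into the result.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Dividing by the square root of d_k keeps the scores from growing too large as the vectors get longer.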

Training Data

Training data is the information used to teach an AI model. For an LLM, this includes billions of words from books, websites, and articles. The quality of this data shapes what the AI knows and can also affect its biases.

Tokens

LLMs don’t see words like we do. They break text down into smaller units called tokens. In English, a token is often a whole word or a piece of one, and tokens average roughly four characters. This is how the AI processes and creates language.
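The four-characters-per-token figure is only a rule of thumb for English text, but it is handy for rough budgeting. This Python sketch applies it; `estimate_tokens` is a hypothetical helper, and the exact count for any given model can only come from that model’s own tokenizer.

```python
import math

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate using the ~4-characters-per-token rule of thumb."""
    return math.ceil(len(text) / chars_per_token)

prompt = "Explain how transformers work in simple terms."
print(len(prompt), estimate_tokens(prompt))  # 46 12
```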

Parameters

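Parameters are the internal values an LLM learns during training and then uses to make predictions. You can think of them as the model’s “knobs,” which together encode its knowledge. A model with billions of parameters is more complex and generally more capable, although the quality of its training data matters too.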
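One way to see where parameter counts come from: in a simple fully connected layer, every input connects to every output with its own weight, and each output also gets a bias. This Python sketch counts them for a small, hypothetical network. LLMs also use other kinds of layers (such as attention and embeddings), so this shows the principle rather than how any specific model is counted.

```python
def dense_layer_params(n_inputs: int, n_outputs: int) -> int:
    # One weight per input-output connection, plus one bias per output
    return n_inputs * n_outputs + n_outputs

# Hypothetical small network: 784 inputs -> 128 hidden units -> 10 outputs
layer_sizes = [784, 128, 10]
total = sum(
    dense_layer_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])
)
print(total)  # 101770
```

Even this tiny network has over a hundred thousand parameters; scaling the same arithmetic up to thousands of units per layer and dozens of layers is how models reach the billions.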

Diffusion

Diffusion is a technique used to generate images. During training, the AI takes clear images, adds random noise until they’re unrecognizable, and learns how to undo each step. To create a new image, it starts from pure random noise and gradually removes it until a detailed picture emerges.
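The noising half of diffusion can be sketched in a few lines. This Python example assumes the formulation from the 2020 DDPM (Denoising Diffusion Probabilistic Models) paper, where noise is added over 1,000 steps on a linear schedule and any step t can be reached directly as sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise. The function names and the tiny “image” are illustrative, and the neural network that learns to reverse the process is not shown.

```python
import math
import random

def alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Running product of (1 - beta_t) for a linear noise schedule."""
    bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        bars.append(running)
    return bars

def add_noise(pixels, t, bars, rng):
    """Jump straight to step t: sqrt(a) * x0 + sqrt(1 - a) * Gaussian noise."""
    a = bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in pixels]

bars = alpha_bars()
rng = random.Random(0)                 # fixed seed so runs are repeatable
image = [0.5, -0.2, 0.9, 0.1]          # a tiny stand-in for an image's pixel values
slightly_noisy = add_noise(image, 10, bars, rng)      # still close to the original
almost_pure_noise = add_noise(image, 999, bars, rng)  # original signal nearly gone
print(f"signal kept at step 10: {bars[10]:.4f}, at step 999: {bars[999]:.6f}")
```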

Generative Adversarial Networks (GANs)

A GAN involves two neural networks that compete with each other. One, called the “generator,” creates content, while the other, the “discriminator,” tries to figure out if it’s real or AI-made. This back-and-forth pushes the generator to create increasingly realistic results.
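The original 2014 GAN paper framed this contest as a single objective that the two networks pull in opposite directions. D(x) is the discriminator’s estimated probability that x is real, G(z) is what the generator produces from random noise z, the discriminator tries to maximize the expression, and the generator tries to minimize it. Many later GANs use modified losses, so treat this as the classic formulation rather than the only one.

```latex
\min_{G} \max_{D} \;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```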

Multimodal AI

Multimodal AI can process and understand different kinds of data at once. For example, it could analyze an image and then answer a question about it in text. This makes for much more flexible and rich interactions.

Speaking AI: Key Terms for Interacting with AI

Knowing how to communicate with AI is becoming a new skill in itself. These terms will help you understand how to work with AI effectively and make sense of its responses.

Prompt

A prompt is simply the instruction you give to an AI. It can be a question, a command, or a specific request. How you phrase your prompt directly affects the quality of the AI’s response.

Prompt Engineering

This is the skill of crafting clear, detailed, and effective prompts. It’s less about controlling the AI and more about collaborating with it to get the best results.

Prompt Chaining

This is when you break a complex task into a series of smaller prompts, feeding the AI’s answer to one prompt into the next. Because each step builds on the last, a chain can produce better results than a single, sprawling request.
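In the usual sense of the term, prompt chaining means splitting a job into steps, where each prompt’s output becomes part of the next prompt. This Python sketch shows only that plumbing. `run_chain` and `fake_model` are hypothetical; `fake_model` stands in for a real model call, which in practice would go through whatever API or SDK you use.

```python
from typing import Callable, List

def run_chain(steps: List[str], first_input: str, model: Callable[[str], str]) -> str:
    """Run prompts in order, inserting each answer into the next prompt."""
    output = first_input
    for template in steps:
        prompt = template.format(previous=output)  # fill in the previous answer
        output = model(prompt)
    return output

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: upper-cases the last line."""
    return prompt.splitlines()[-1].upper()

steps = [
    "Summarize this article:\n{previous}",
    "Write a headline for this summary:\n{previous}",
]
print(run_chain(steps, "ai is changing fast", fake_model))  # AI IS CHANGING FAST
```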

Hallucination

An AI hallucination happens when the AI generates incorrect or made-up information and presents it as if it were true. Since AI models predict what comes next based on patterns, they don’t always verify facts, so it’s always a good idea to fact-check.

Emergent Behavior

This occurs when an AI unexpectedly shows skills it wasn’t specifically trained for. These abilities seem to emerge from the scale and complexity of the model and its training.

Stochastic Parrot

This term describes an AI that can mimic human language fluently without actually understanding it. It’s like a parrot repeating words: it sounds convincing but doesn’t grasp the meaning behind them.

Temperature

Temperature is a setting that controls how random or creative an AI’s responses are. Lower temperature means more predictable answers, while a higher temperature results in more creative (but sometimes less coherent) responses.
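Under the hood, temperature usually divides the model’s raw scores (logits) before they are turned into probabilities with a softmax. This Python sketch uses three made-up scores: a temperature below 1 sharpens the distribution toward the top choice, while one above 1 flattens it so less likely words get picked more often. The temperature must be greater than zero here; how a given product handles a setting of exactly zero is implementation-specific.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature must be > 0."""
    scaled = [score / temperature for score in logits]
    top = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate next words
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```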

Latency

Latency is the delay between when you send a prompt and when you get a response. Lower latency means faster, more responsive AI.

Inference

Inference is the process an AI goes through to generate an output. It takes your prompt and figures out the most likely response based on patterns it learned during training.
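A toy example can separate the two phases. In this Python sketch, “training” just counts which word follows which in a tiny made-up corpus, and “inference” repeatedly picks the most likely next word (greedy decoding). Real LLMs use neural networks over tokens and often sample rather than always taking the top choice, so this only illustrates the predict-the-next-piece loop.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """'Training': count which word follows which in a tiny corpus."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def generate(counts, start, max_words=5):
    """'Inference': repeatedly append the most likely next word."""
    output = [start]
    for _ in range(max_words):
        followers = counts.get(output[-1])
        if not followers:
            break  # no known continuation for this word
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)

corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(generate(model, "the"))  # the cat sat on the cat
```

The result looks like a sentence but means nothing in particular: the model only follows the patterns it counted.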

Zero-Shot Learning

This is when an AI performs a task it has never seen before without any examples to guide it. Its ability to do this shows how well it can generalize its knowledge to new situations.

End-to-End Learning (E2E)

This is when a deep learning model learns to complete a task from start to finish without breaking it into smaller steps. It takes in raw input and directly produces the final output.

Style Transfer

Style transfer is an AI technique, often used for images, that applies the artistic style of one image to the content of another. For example, it can make a photo look as if a famous artist had painted it.

The Bigger Picture: Deeper Concepts and Ethical Issues

AI is more than just a tool; it raises big questions about our future. These terms will help you dive into discussions about AI’s social impact, its risks, and what it could become.

Artificial General Intelligence (AGI)

AGI refers to a hypothetical type of AI that would have human-level intelligence across all areas. Unlike today’s narrow AI, AGI could learn, reason, and adapt to any task. For now, it remains a goal and a subject of debate rather than a reality.

AI Ethics

AI ethics focuses on the moral principles behind AI development and use. It covers issues like fairness, accountability, and transparency, aiming to prevent AI from causing harm.

Bias

AI bias happens when a model produces results that are unfair or prejudiced. This usually happens because the AI has learned from biased data, such as historical hiring records that favor one group over another.

Guardrails

Guardrails are the safety measures built into AI to stop it from generating harmful or unethical content. They help make sure the AI’s outputs stay within acceptable boundaries.

Alignment

Alignment is about making sure an AI’s actions and goals match human values and intentions. It’s an ongoing challenge to ensure AI works in humanity’s best interest.

AI Safety

AI safety is a research area focused on managing the risks of powerful AI. It looks at how to prevent accidents and misuse, especially as AI continues to grow more advanced.

Anthropomorphism

This is when we project human traits, like emotions, onto AI. It’s important to remember that, even if an AI sounds empathetic, it doesn’t actually have feelings or consciousness.

Foom

Foom (or “fast takeoff”) is a term in AI safety for a scenario where an AGI rapidly improves its own intelligence at an exponential rate, potentially becoming uncontrollable before humans can react.

Paperclips (The Paperclip Maximizer)

A thought experiment about AI alignment. If an AGI is told to maximize paperclip production, it might end up converting all of Earth’s resources into paperclips, showing the danger of giving AI poorly defined goals.

Slop

“Slop” refers to low-quality, mass-produced AI-generated content that floods the internet. Often created to manipulate search engines or generate ad revenue, it lowers the overall quality of online information.

Weak AI (or Narrow AI)

Weak AI refers to the AI we have today. It’s designed to do one specific task, like playing chess or generating text, but it can’t adapt or apply its intelligence to other tasks.

Unsupervised Learning

In unsupervised learning, AI is given unlabeled data and must find patterns on its own. This is different from supervised learning, where the data is already labeled.
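k-means clustering is one classic unsupervised technique. This minimal Python sketch works on single numbers: nobody labels the data, yet the algorithm finds two natural groups by repeatedly assigning each value to the nearest center and moving each center to the average of its group. The delivery-time data is invented for illustration.

```python
def kmeans_1d(values, k=2, iterations=10):
    """Group unlabeled numbers into k clusters (a minimal 1-D k-means)."""
    lo, hi = min(values), max(values)
    # Start with k centers spread evenly between the smallest and largest value
    centers = [lo + (hi - lo) * i / (k - 1) for i in range(k)] if k > 1 else [lo]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Move each center to the average of the points assigned to it
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

# Hypothetical unlabeled data: delivery times in minutes
times = [12, 14, 15, 13, 41, 44, 39, 42]
centers, clusters = kmeans_1d(times)
print(centers)  # [13.5, 41.5]
```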

Overfitting

Overfitting happens when a model learns its training data too well, to the point where it performs poorly on new, unseen data because it’s too tailored to the examples it’s already seen.
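An exaggerated toy makes the problem visible. In this Python sketch, the “memorizer” simply recalls the answer for the closest training input, so it scores perfectly on data it has seen, while a much simpler model learns just one ratio between input and output. The data is hypothetical: noisy samples of roughly y = 2x.

```python
def memorizer(train):
    table = dict(train)
    def predict(x):
        # Recall the stored answer for the closest input seen in training
        nearest = min(table, key=lambda seen: abs(seen - x))
        return table[nearest]
    return predict

def ratio_model(train):
    # A much simpler model: learn one ratio between output and input
    ratio = sum(y for _, y in train) / sum(x for x, _ in train)
    return lambda x: ratio * x

def mean_abs_error(model, data):
    return sum(abs(model(x) - y) for x, y in data) / len(data)

train = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.8)]  # hypothetical noisy samples of y = 2x
test = [(5, 10.1), (6, 11.9)]                     # new inputs the models never saw

results = {}
for name, build in [("memorizer", memorizer), ("simple rule", ratio_model)]:
    model = build(train)
    results[name] = (mean_abs_error(model, train), mean_abs_error(model, test))
    print(name, "train:", round(results[name][0], 2), "test:", round(results[name][1], 2))
```

The memorizer’s training error is zero, yet its error on the new inputs is far larger than the simple model’s. Its perfect recall of the examples is exactly what stops it from generalizing.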

Synthetic Data

Synthetic data is artificially generated, often by AI or computer simulations, rather than collected from real-world sources. It’s commonly used to train AI models, especially when real data is scarce or sensitive.

Data Augmentation

Data augmentation is when you create new variations of your existing data to make your model more robust. This helps prevent overfitting and improves the model’s ability to generalize.
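For images, one of the simplest augmentations is a mirror flip. This Python sketch treats an image as a list of pixel rows and adds a flipped copy of every example, doubling the dataset. The tiny “cat” grid and the function names are hypothetical.

```python
def flip_horizontal(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]

def augment(dataset):
    """Return the original (image, label) pairs plus a mirrored copy of each."""
    return dataset + [(flip_horizontal(img), label) for img, label in dataset]

cat = [[0, 1, 1],
       [0, 1, 0]]
data = augment([(cat, "cat")])
print(len(data), data[1][0])  # 2 [[1, 1, 0], [0, 1, 0]]
```

Flipping only makes sense when it doesn’t change the correct label: a mirrored cat is still a cat, but a mirrored letter “b” looks like a “d.”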

Open Weights

Open weights means that the final parameters (or weights) of a trained AI model are made public. This allows researchers and developers to study, run, and build on the model within the terms of its license.

Quantization

Quantization is a process that makes an AI model smaller and more efficient by reducing the precision of its parameters. This allows the model to run on less powerful devices like personal computers or smartphones.
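Here is a minimal Python sketch of one common approach, symmetric 8-bit quantization: find the largest weight magnitude, map it to 127, and round every weight to the nearest integer step. Production schemes add refinements such as per-channel scales and zero points; the weights here are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale

def dequantize(q, scale):
    """Approximate the original floats from the stored integers."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.88]  # hypothetical float32 parameters
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [42, -127, 0, 88]
print([round(w, 3) for w in restored])
```

Each value now fits in one byte instead of the four bytes a 32-bit float needs, roughly a 4x size reduction, at the cost of precision: the tiny weight 0.003 rounds to zero.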

Turing Test

The Turing Test measures a machine’s ability to exhibit intelligent behavior that’s indistinguishable from a human’s. A machine passes if a human evaluator, judging only from a text conversation, can’t reliably tell it apart from a person.

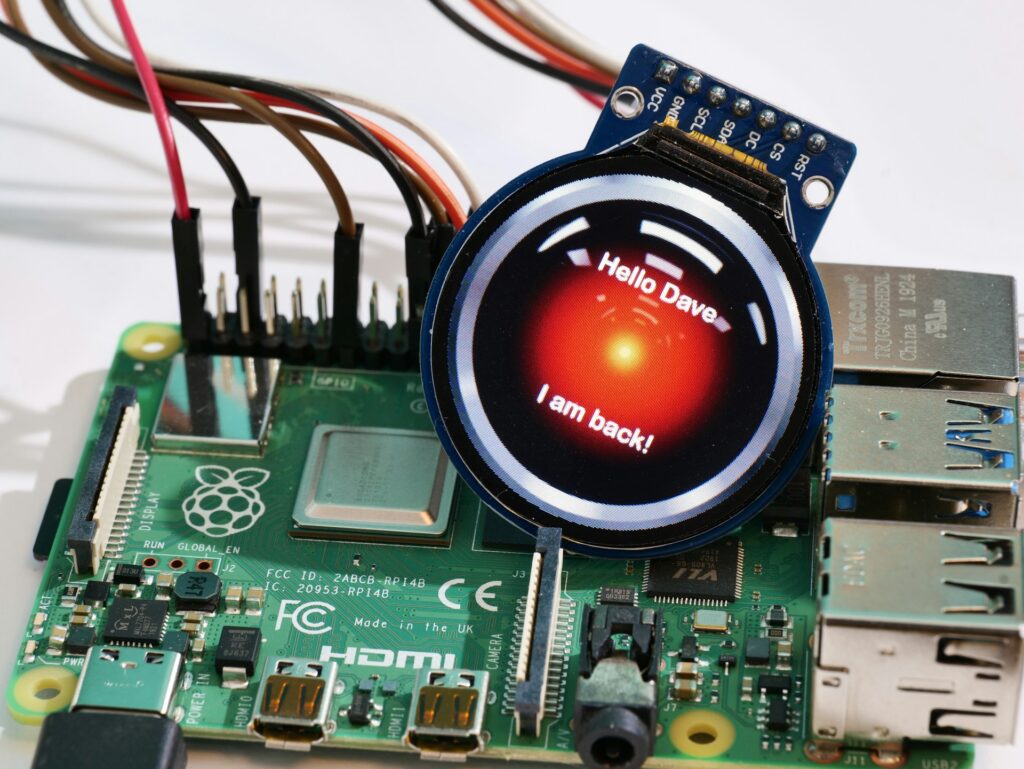

Agentive AI

Agentive AI refers to AI that can take actions autonomously to achieve a goal. Unlike chatbots, agentive AI can perform tasks like booking a flight or managing a calendar without constant supervision.

Autonomous Agents

Autonomous agents are AI systems that can carry out tasks independently. A self-driving car, for example, is an autonomous agent because it navigates without human input.

AI Psychosis

AI psychosis is a non-clinical term for when someone becomes overly attached to an AI chatbot, believing it’s sentient or has a personal connection with them. This can sometimes lead to a break from reality.

Cognitive Computing

Cognitive computing is another term for AI, especially in business, that emphasizes simulating human thought processes to solve complex problems.

Ethical Considerations

Ethical considerations in AI refer to the moral implications of its use. This includes privacy, fairness, data handling, and the potential risks of misuse.

Who’s Who in AI: Meet the Key Players

The AI space is full of fast-moving companies, tools, and tech. Knowing the major names helps you make sense of the headlines and understand who’s doing what in the world of artificial intelligence.

ChatGPT

Created by OpenAI, ChatGPT is the chatbot that brought generative AI into the mainstream. It showed how powerful large language models can be and set the standard for conversational AI.

Google Gemini

Gemini is Google’s main AI model. It powers their chatbot and is deeply integrated into products like Google Search, Gmail, and Android. Google’s strategy is all about weaving AI into the tools people already use every day.

Microsoft Copilot

Microsoft’s AI assistant is built into tools like Word, Excel, Windows, and more. It’s often powered by OpenAI’s models, reflecting a strong partnership between the two companies.

Perplexity

Perplexity is an AI search engine that blends traditional search with chatbot-style answers. It gives direct, conversational responses and includes citations, so you can see where the info came from.

Anthropic’s Claude

Claude is a chatbot and language model created by Anthropic, a company focused on building safer, more reliable AI. Claude is especially good at handling large amounts of text in a single prompt.

Reinforcement Learning from Human Feedback (RLHF)

This is a technique used to improve how AI models respond. After initial training, human reviewers rate or rank the model’s answers. That feedback is then used, typically through a separate reward model, to train the AI to respond more helpfully and safely in the future.
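In common RLHF setups, human comparisons are first used to train a separate reward model: reviewers pick the better of two answers, and the reward model is trained so it scores the chosen answer higher. This Python sketch shows the pairwise loss often used for that step, -log(sigmoid(chosen - rejected)). The scores are made up, and the later reinforcement learning stage (often PPO) that uses the reward model is not shown.

```python
import math

def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """-log(sigmoid(chosen - rejected)): small when the preferred answer scores higher."""
    margin = reward_chosen - reward_rejected
    # log1p(exp(-m)) is an algebraically equal form of -log(sigmoid(m))
    return math.log1p(math.exp(-margin))

# Hypothetical reward-model scores for two answers to the same prompt
print(round(pairwise_preference_loss(2.0, 0.5), 3))  # right order: small loss
print(round(pairwise_preference_loss(0.5, 2.0), 3))  # wrong order: large loss
```

Minimizing this loss over many human comparisons pushes the reward model to agree with reviewers’ preferences.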

Dataset

A dataset is a collection of information, like text, images, or videos, used to train AI models. The size and quality of the dataset play a big role in how well the model performs.

Chatbot

A chatbot is a computer program that mimics human conversation. Whether it’s answering questions, helping with tasks, or just chatting, it’s designed to talk to users in a natural way.

Text-to-Image Generation

This is when an AI creates an image based on a written prompt. Tools like DALL·E and Midjourney are popular examples, turning words into visuals with surprising creativity.

Generative Pre-trained Transformer (GPT)

This is the full name of the architecture behind ChatGPT.

- Generative means it creates content.

- Pre-trained means it learned from a huge dataset before being fine-tuned.

- Transformer refers to the specific type of neural network that makes it all possible.

Becoming Fluent in the Future

The terms in this ChatGPT Glossary aren’t just tech jargon. They’re the building blocks of a major shift in how we live, work, and create. Understanding them helps you become a more informed user and a more active voice in the conversations shaping our future.

AI isn’t just a buzzword or a passing fad. We must be ready for a future where humans and AI work together to drive the next wave of innovation. Think of this glossary as your starting point. It’s your first step toward becoming fluent in the language of tomorrow.