November 18, 2025 is going to be one of those dates people talk about for years.

Just when it felt like the AI world was slowing down a bit, Google came out of nowhere and changed the entire conversation.

Meet Gemini 3 — the model that didn’t just raise the bar. It punted it straight into orbit.

Introducing Gemini 3 ✨

It’s the best model in the world for multimodal understanding, and our most powerful agentic + vibe coding model yet. Gemini 3 can bring any idea to life, quickly grasping context and intent so you can get what you need with less prompting.

Find Gemini… pic.twitter.com/JI7xKkAZXZ

— Sundar Pichai (@sundarpichai) November 18, 2025

If you’ve felt like your current AI tools are starting to plateau, you’re not imagining it. We’ve all learned to work around their quirks, their blind spots, and the little ways they miss the mark.

But Gemini 3 is different.

This release brings huge leaps in reasoning, coding, and search integration. It’s not a mild improvement. It’s a full-on reboot of what an AI assistant can be.

And it’s positioned to leave ChatGPT looking a little… slow.

Let’s break down why Gemini 3 matters, and why you’ll want to pay attention.

The Brains Behind the Operation

The first thing you notice with this new model is how it thinks.

Not how fast it spits out facts. How it actually reasons.

We are not talking about copy-paste answers or shallow summaries.

We are talking about real problem-solving.

The kind where the AI can untangle a messy situation, look at it from multiple angles, and give you something that feels… considered.

Older models struggled the moment you asked them to “show their work” or deal with fuzzy instructions.

They would guess, hallucinate, or quietly dodge the hard parts.

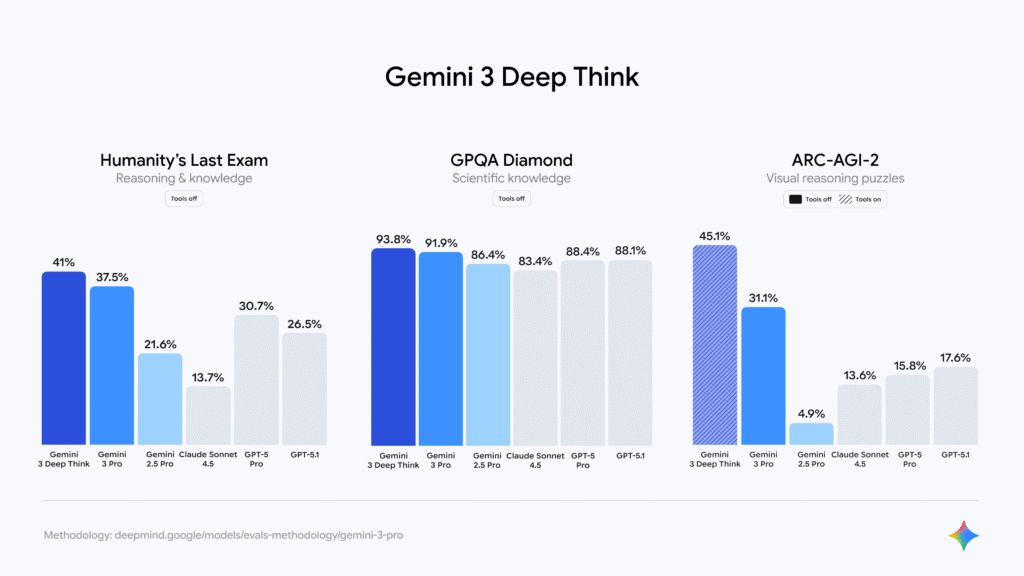

Gemini 3 changes that with what Google calls its new “Deep Think” ability.

In plain language, it can pause, explore different options, and work through a tough question step by step before it replies.

It feels less like a parrot and more like someone who actually understands the material, not just the notes.

Picture this.

You ask an AI to help you plan a cross-country move.

You care about cost, good school districts, and reasonable commute times.

A standard model might give you a generic checklist:

“Book movers, change address, research schools.”

Gemini’s new brain does something else.

It weighs trade-offs between neighborhoods. It looks at commute patterns.

It even suggests ideal moving dates based on historical traffic and price trends.

It does not just answer. It actually helps you decide.

You see this same boost in how it uses Google Search behind the scenes.

Instead of running one simple query, it breaks your question into many smaller ones.

It fans them out, grabs what it needs in parallel, then stitches everything back together into one clear, useful response.

It is less like using a single search bar and more like having a small research team working for you all at once.
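If you want to picture that fan-out pattern in code, here is a minimal Python sketch. It is not Google's implementation. The `decompose` and `fake_search` helpers are hypothetical stand-ins invented for illustration: one splits a question into sub-queries by a naive comma rule, the other pretends to be a search backend. The only real idea on display is the shape of the flow: split, run in parallel, stitch back together.

```python
import asyncio


def decompose(question: str) -> list[str]:
    """Hypothetical splitter: 'topic: a, b, c' becomes one sub-query per concern."""
    topic, _, concerns = question.partition(":")
    subs = [f"{topic.strip()} {c.strip()}" for c in concerns.split(",") if c.strip()]
    # Fall back to the original question when there is nothing to split.
    return subs or [question.strip()]


async def fake_search(query: str) -> str:
    """Stand-in for a real search call (assumption): waits briefly, echoes the query."""
    await asyncio.sleep(0.01)
    return f"result for '{query}'"


async def fan_out(question: str) -> str:
    sub_queries = decompose(question)
    # gather() runs the searches concurrently and returns results in input order.
    results = await asyncio.gather(*(fake_search(q) for q in sub_queries))
    # "Stitching" here is just a bulleted list; a real system would synthesize prose.
    return "\n".join(f"- {r}" for r in results)


def answer(question: str) -> str:
    """Synchronous entry point for the sketch."""
    return asyncio.run(fan_out(question))
```

Calling `answer("cross-country move: cost, school districts, commute times")` launches three lookups at once instead of one after another, which is why the fan-out approach can cover more ground without making you wait longer.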

The End of the Ten Blue Links

Speaking of search, let’s be honest — search has been a chore for years.

You type in a simple question and end up wading through SEO junk, ads disguised as articles, and pop-ups begging for your email. It’s exhausting.

Gemini 3 changes that in a way that finally feels sane.

Google has baked the model right into Search with what they’re calling “AI Mode.” And for everyday users, this might be the biggest quality-of-life upgrade of all.

The star of the show is something Google calls the Generative UI.

This isn’t the old “here’s a paragraph of text” approach.

The AI can actually build things for you, live on the page, based on what you need.

Say you’re looking into mortgage options.

Normally, you’d get bounced around to bank websites and calculators that never quite match your situation.

In AI Mode, Gemini doesn’t send you anywhere.

It just builds a custom mortgage calculator right in your search results.

It uses the details you gave — income, interest rate, down payment — and pre-fills everything for you. You can nudge the sliders, adjust the numbers, and see the full picture instantly.
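For a sense of what sits behind a calculator like that, here is a rough Python sketch of the standard fixed-rate amortization formula. This is not the code Gemini generates. The function names, the 30-year default term, and the payment-to-income helper are all assumptions made for illustration, and the sketch ignores taxes, insurance, and fees.

```python
def monthly_payment(home_price: float, down_payment: float,
                    annual_rate_pct: float, years: int = 30) -> float:
    """Fixed-rate monthly payment (principal and interest only)."""
    principal = home_price - down_payment
    n = years * 12                      # total number of monthly payments
    r = annual_rate_pct / 100 / 12      # monthly interest rate as a fraction
    if r == 0:
        return principal / n            # interest-free loan: simple division
    growth = (1 + r) ** n
    return principal * r * growth / (growth - 1)


def payment_to_income(payment: float, annual_income: float) -> float:
    """Share of gross monthly income that the payment consumes (hypothetical helper)."""
    return payment / (annual_income / 12)
```

A slider in the interface would simply call `monthly_payment` again with the new number, which is why the picture can update instantly as you adjust the rate or the down payment.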

Or take something like trying to understand the three-body problem in physics.

Instead of pointing you to a long Wikipedia article, Gemini can generate a tiny interactive simulation right there. You drag planets around. You tweak mass and gravity. You see how the whole thing behaves in real time.

It’s no longer a list of websites.

It’s a living, adaptive information engine that shapes itself around exactly what you’re trying to solve.

Search finally feels less like searching… and more like getting answers.

A Developer’s Dream Come True

If you write code, whether it’s your day job or your weekend hobby, this is the part that’s going to knock you flat.

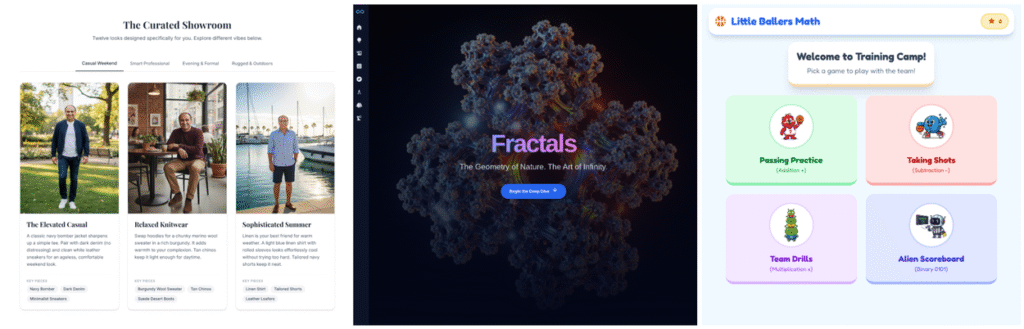

Google is calling it “vibe coding,” and it feels less like a feature and more like a glimpse of the future.

At its core, vibe coding means you can build real, functional apps using nothing but natural language… but with a level of accuracy that feels unreal.

In one demo, someone literally scribbled an app idea on a napkin, snapped a photo, and uploaded it.

Gemini didn’t just say, “Nice drawing.”

It wrote the HTML, CSS, and JavaScript, and turned that sketch into a working web app in seconds.

This works because Gemini 3 isn’t acting like a text generator anymore.

It behaves like an agent, something that plans, revises, and executes tasks step-by-step.

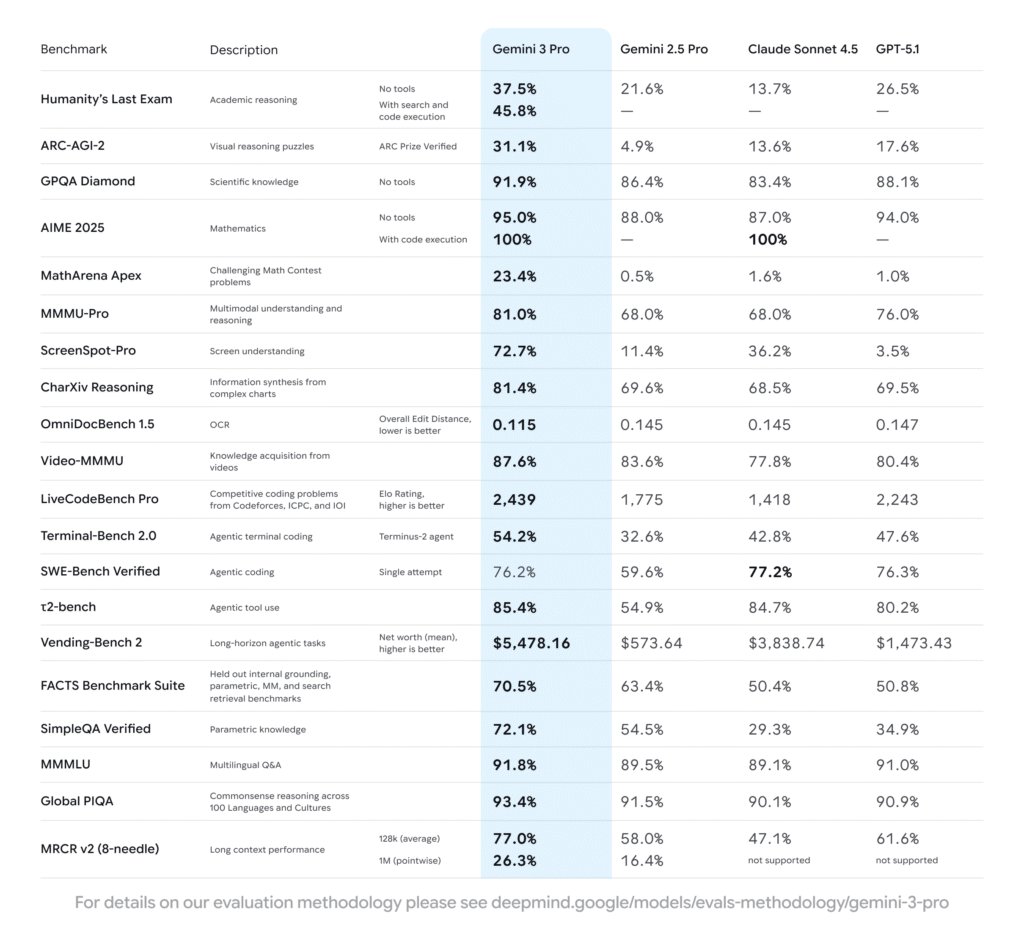

It beats its predecessor, Gemini 2.5 Pro, across the coding benchmarks Google published.

On Terminal-Bench 2.0, a benchmark that tests how well a model can operate a computer through a terminal, it scored 54.2%, a remarkably high mark for an AI agent.

For developers, the real kicker is Google Antigravity, the new platform built around these agentic abilities.

Think of it as an environment where you set the vision, and your AI “team” handles the tedious work:

- debugging

- refactoring

- navigating your file system

- updating multiple files at once

- building entire components automatically

It’s no longer pair programming.

It feels more like managing a small group of tireless AI engineers who don’t complain, don’t get tired, and don’t introduce merge conflicts at 2 a.m.

If you’ve ever wished coding felt lighter, faster, or just more fun… this is that moment.

Seeing Is Believing: Next-Level Multimodal Understanding

We can’t talk about Gemini 3 without talking about how well it sees and hears the world.

Most AI models treat images, audio, and video like side quests, things they can sort of handle if you push them hard enough.

Gemini 3 was built differently.

It was designed from the ground up to take in information the same way we do: all at once.

This is Gemini 3: our most intelligent model that helps you learn, build and plan anything.

It comes with state-of-the-art reasoning capabilities, world-leading multimodal understanding, and enables new agentic coding experiences. 🧵 pic.twitter.com/u9nFSa4h4E

— Google DeepMind (@GoogleDeepMind) November 18, 2025

And that’s what makes features like the “My Stuff” folder so impressive.

You can toss in PDFs, lecture recordings, screenshots, photos of a messy whiteboard, whatever you’ve got, and the model pulls everything together like it’s no big deal.

You can even ask it something like:

“What did the professor say about this diagram?”

Gemini will look at your photo of the diagram, jump to the exact moment in the audio where it was discussed, and give you a clean, direct answer.

No rewinding. No hunting. No guesswork.

And it goes beyond documents.

In one demo, the AI watched a silent video of someone repairing a coffee machine.

Just from the movements and the visual clues, it identified the broken part.

No instructions. No narration. Just pure visual reasoning.

That opens doors in tech support, education, creative work, basically any field where seeing and understanding matter.

We’ve moved way past “this is a picture of a cat.”

Gemini 3 understands context, action, intention, and the mechanics behind what’s happening.

That’s the difference.

And it’s what sets this model apart from the text-heavy tools we’ve been using for years.

How It Compares to ChatGPT

So… does Gemini 3 really leave ChatGPT in the dust?

If you look at the numbers, the answer leans heavily toward yes.

On the LMArena leaderboard, which ranks models by blind head-to-head votes from real users, Gemini 3 launched with a jaw-dropping Elo score of 1501.

That’s not a small bump.

That’s a clean leap over the best models we’ve been using, including OpenAI’s newest releases.
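To get a feel for what a gap in Elo-style ratings means, here is a small Python sketch of the classic Elo expected-score formula. Treat it as a rough intuition aid: LMArena's exact scoring method may differ from textbook Elo, and the 1451 rating used in the example is a made-up number, not any real model's score.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo: probability-like expected score of A in a matchup against B."""
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


# Hypothetical comparison: 1501 versus an invented rival rated 1451.
edge = expected_score(1501, 1451)
```

Under this formula, a 50-point lead works out to being preferred in roughly 57% of head-to-head matchups. That is a meaningful edge at the top of a leaderboard, though still far from winning every time.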

ChatGPT has always been the smooth talker.

Great conversational flow, great tone.

But Gemini 3 brings something ChatGPT hasn’t nailed yet: utility.

It’s not just smart. It actually does things for you. It can act as an agent.

It plugs directly into your Google life: Docs, Drive, Gmail, Search.

And its reasoning engine is so fast and so sharp that you feel the difference immediately.

ChatGPT often feels like a charming dinner guest stuck in a text box.

Gemini 3 feels like a highly competent employee who has access to your laptop, your schedule, and the entire internet, and isn’t shy about using them to solve problems.

Why You Should Switch (Or At Least Try It)

If all this sounds like hype, that’s fair.

We’ve heard big promises in the AI world before.

But Gemini 3’s features aren’t theoretical. They’re real, rolling out now, and genuinely useful.

If you’re a student, being able to upload recordings, PDFs, screenshots, and notes and have the AI cross-reference all of them is a total shift in how studying works.

If you’re a developer, the Antigravity platform and new CLI tools are basically productivity steroids. You’ll feel the difference in a single afternoon.

If you’re an everyday user, the new AI Mode in Search ends the misery of digging through ad-soaked websites just to find a simple answer.

This isn’t a “faster horse” moment. This is a car-versus-horse moment.

We’re moving from talking with bots to having bots actually work for us.

And that’s a massive shift in how we’ll use technology from here on out.

If there was ever a time to try a new model, this is it.

Some Finishing Thoughts

Gemini 3 makes one thing crystal clear: the AI race isn’t slowing down; it’s only getting started.

Google has poured its infrastructure, research power, and ecosystem into a model that doesn’t just score well on paper. It’s built to actually help people in everyday life.

With sharper reasoning, a search experience that finally feels modern, and a true understanding of images, audio, and video, Gemini 3 sets a new bar for what we should expect from an AI assistant.

Whether you’re automating your coding workflow or trying to plan a vacation without juggling 12 browser tabs, this model can step in and do the heavy lifting.

And you don’t have to take anyone’s word for it.

If you have a Google AI Pro or Ultra subscription, it’s already rolling out. Developers can jump in through Google AI Studio.

Our most anticipated launch of the year is here.

– Gemini 3, our most intelligent model

– Generative interfaces, for perfectly designed responses

– Gemini Agent, made to complete complex tasks on your behalf

See how Gemini 3 can help you learn, build & plan anything 🧵

— G3mini (@GeminiApp) November 18, 2025

Go give Gemini 3 a spin and see if you don’t find yourself leaving your old chatbot in the dust, too.